Intro

As originators of 3D-N-CAS (3D navigation computer-assisted surgery; June 1994) and pioneers of Tele-3D-CA-NESS (tele-3D navigation computer-assisted endoscopic sinus surgery; October 1998), we have used these novel technologies for more than two decades. Even so, during real operations (nose, sinuses, skull base) we have occasionally found it impossible to obtain an ideal perception of the surrounding head anatomy, despite using the latest technologies (modern CAS/NESS realistic 3D volume-rendered simulations of the real intraoperative anatomy, virtual endoscopy (VE), virtual surgery (VS), etc.). Ideally, this perception should be available completely and for the whole duration of the operation.

We therefore saw the need for a new surgical approach that would fully meet the surgeon's demand to feel present in the virtual world of the patient's head anatomy and to navigate and manipulate (VS) this virtual, non-existing anatomic environment. Since 2013 we have been improving the use of touch screens, voice control and intelligent feedback in the OR (operating room), combining hardware with original software (SW) solutions that let the surgeon control 3D simulations and the motion of 2D and 3D medical images, as well as VE and VS, touch-free ('in the air') and in real time.

This software is part of our scientific research project and intended purely for demonstration purposes!

Our plug-in for the OsiriX platform

We integrate our software into OsiriX as extensions that do not interfere with its well-known user interface. We think that OsiriX is well-designed software for managing hospital information systems, but it requires updates for integration with new technologies and modifications for special use cases. Our idea is to provide a full-featured toolset for use in the OR environment.

Our recently developed plug-in for the OsiriX platform makes it possible to use the Leap Motion sensor as an interface for camera positioning in 3DVR and VE views, with speech recognition integrated as a voice control (VC) solution. Using only one hand, the surgeon can perform different types of gestures to navigate through virtual 3D space and adjust the viewing angle and camera position. Because these gestures resemble natural movements used with real-world objects, surgeon distraction while interacting with the positioning system is minimized.

Applications

- Preoperative planning

- Intraoperative assistance/guidance

- Postoperative analysis

- Virtual endoscopy (VE)

- Virtual surgery (VS)

- Telesurgery with virtual endoscopy/surgery support

- Education

Telesurgery, understood as remote visualization of the operative field, must allow surgeons in any OR not only to transfer 3D computer models and surgical instrument movements together with 2D images and 3D-model manipulations, but also to define the pathology, plan an optimal path to it, and decide how to perform the real surgery. Using tele-fly-through or tele-VE through 3D models, both surgeons can preview all the characteristics of the region and thus predict and determine the next steps of the operation.

Bitmedix Untouched is an OsiriX extension that adds support for the Leap Motion sensor, enabling more intuitive control of 3D objects rendered from specific studies. Our goal was to introduce contactless interfaces to a 3D virtual navigation system through a set of gesture and voice commands. Our solution uses a compact hand-gesture sensor mounted in the surgeon's workspace. The user can orbit and zoom the rendered volume and control the camera position in virtual endoscopy.

We developed a dedicated plug-in for the OsiriX platform that lets users employ the Leap Motion (LM) sensor as an interface for camera positioning in 3DVR and VE views and integrates speech recognition as a VC solution in an original way that differs from projects already on the market. We defined different types of gestures for 3DVR and VE that enable navigation through virtual 3D space and adjustment of the viewing angle and camera position using only one hand. This minimizes surgeon distraction while interacting with the positioning system. We chose gestures that people can easily correlate with natural movements used when interacting with real-world objects, making navigation as fast as possible while maintaining precision and shortening learning time.

Technical details: In the 3D volume rendering (VRen) view, the x-axis of the vector received from the sensor is mapped to the azimuth of the camera, the y-axis to the elevation and the z-axis to the zoom. This produces the effect of orbiting around the center of the object. To improve performance, the level of detail is lowered whenever the frame handler is triggered; when the hand exits the sensor's working area, the level of detail is restored to its maximum value. For VE, the goal was a fly-through effect. The x- and y-axes are used as the yaw and pitch of the camera, and the z-axis increases or decreases the camera's overall movement speed. The OsiriX application programming interface (API) is used to get the current direction and position of the camera. The camera position is then translated by the current speed along the camera's current direction, and the same translation is applied to the camera's focal point.
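The two mappings described above can be sketched as follows. This is a minimal Python illustration, not the plug-in's own code and not the OsiriX API: the `OrbitCamera` and `FlyCamera` classes, the level-of-detail constants, the z-up coordinate convention and the treatment of each sensor vector as a per-frame increment are all hypothetical assumptions.

```python
import math
from dataclasses import dataclass, field
from typing import List

MAX_LOD = 1.0   # hypothetical: full rendering quality
LOW_LOD = 0.25  # hypothetical: reduced quality while the hand is moving


@dataclass
class OrbitCamera:
    """3DVR camera orbiting the centre of the rendered volume (hypothetical)."""
    azimuth: float = 0.0    # degrees
    elevation: float = 0.0  # degrees
    zoom: float = 1.0
    lod: float = MAX_LOD


def vr_frame(cam: OrbitCamera, x: float, y: float, z: float) -> None:
    """3DVR frame handler: x -> azimuth, y -> elevation, z -> zoom."""
    cam.azimuth = (cam.azimuth + x) % 360.0
    cam.elevation = max(-90.0, min(90.0, cam.elevation + y))
    cam.zoom = max(0.1, cam.zoom + z)  # keep zoom positive
    cam.lod = LOW_LOD                  # lower detail while the handler fires


def vr_hand_exited(cam: OrbitCamera) -> None:
    """Hand left the sensor's working area: restore full detail."""
    cam.lod = MAX_LOD


@dataclass
class FlyCamera:
    """VE camera defined by a position and a focal point (z-up, hypothetical)."""
    position: List[float] = field(default_factory=lambda: [0.0, 0.0, 0.0])
    focal_point: List[float] = field(default_factory=lambda: [1.0, 0.0, 0.0])
    speed: float = 0.0


def ve_frame(cam: FlyCamera, x: float, y: float, z: float) -> None:
    """VE frame handler: x -> yaw, y -> pitch (degrees), z -> speed change."""
    d = [f - p for f, p in zip(cam.focal_point, cam.position)]
    dist = math.sqrt(sum(c * c for c in d))
    yaw = math.atan2(d[1], d[0]) + math.radians(x)
    pitch = math.asin(max(-1.0, min(1.0, d[2] / dist))) + math.radians(y)
    limit = math.pi / 2 - 1e-3  # avoid flipping over the poles
    pitch = max(-limit, min(limit, pitch))
    direction = [math.cos(pitch) * math.cos(yaw),
                 math.cos(pitch) * math.sin(yaw),
                 math.sin(pitch)]
    cam.speed = max(0.0, cam.speed + z)  # assumption: speed never goes negative
    # Re-aim the focal point, then translate position and focal point alike.
    step = [cam.speed * c for c in direction]
    cam.focal_point = [p + dist * c + s
                       for p, c, s in zip(cam.position, direction, step)]
    cam.position = [p + s for p, s in zip(cam.position, step)]
```

Keeping position and focal point moving by the same step preserves the viewing distance, which is what makes the motion read as a fly-through rather than a zoom.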

To make it easier to navigate the application and use its functions through contactless interfaces, we added voice commands (VC). Application functions can be used unobtrusively, regardless of whether the active view is 3DVR or VE. In addition, a "switch" VC lets the user toggle the active application view. ROIs in different slices that belong together should share the same name, so that they form an aggregate representing one 3D segmentation object. Each such 3D region is assigned a keyword that serves as a VC, allowing the user to toggle the region's visibility in the visualization preview. It is advisable to mark regions with contrasting colors for easier recognition when multiple ROI segments are present in the current view. We keep VC keywords short and simple, which we found to perform better with offline voice recognition. Because recognition runs offline, it does not depend on network speed or availability, which further improves the stability and reliability of the system.
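The ROI aggregation and keyword dispatch described above can be sketched in Python as follows. Speech recognition itself is not shown; the sketch assumes the recognizer already returns one keyword per utterance. The `ROI` and `VoiceCommands` classes and the case-insensitive matching of names are hypothetical; only the "switch" keyword and the name-based grouping come from the text.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ROI:
    name: str         # ROIs sharing a name form one 3D segmentation object
    slice_index: int


@dataclass
class VoiceCommands:
    """Hypothetical VC dispatcher; independent of the active view."""
    active_view: str = "3DVR"
    regions: Dict[str, List[int]] = field(default_factory=dict)
    visible: Dict[str, bool] = field(default_factory=dict)

    def load_rois(self, rois: List[ROI]) -> None:
        """Aggregate same-named ROIs across slices into 3D objects."""
        groups: Dict[str, List[int]] = defaultdict(list)
        for roi in rois:
            groups[roi.name.lower()].append(roi.slice_index)
        self.regions = {name: sorted(s) for name, s in groups.items()}
        self.visible = {name: True for name in self.regions}

    def handle(self, phrase: str) -> str:
        """Dispatch one recognized keyword (same behaviour in 3DVR and VE)."""
        word = phrase.strip().lower()
        if word == "switch":
            self.active_view = "VE" if self.active_view == "3DVR" else "3DVR"
            return f"view: {self.active_view}"
        if word in self.visible:
            self.visible[word] = not self.visible[word]
            return f"{word}: {'shown' if self.visible[word] else 'hidden'}"
        return "unrecognized"
```

Because every region keyword is looked up in a single table, the vocabulary stays small, which matches the observation that short keywords work better with offline recognition.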

Technical details: The plug-in can be compiled in the Xcode IDE on macOS and then easily installed on the OsiriX platform. After installation, the plug-in runs in the OsiriX environment and waits for the device to connect. Once the device is connected, the frame handler waits for a VR or VE view to be opened; when the view is opened, the sensor is ready to use. This interface does not interfere with any other function of the OsiriX platform and can be used simultaneously with the classic mouse interface.
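The readiness sequence above (device connected, then a VR or VE view open) can be modelled as a small state machine. This is a hypothetical Python sketch for illustration only; the class, method and state names are not part of OsiriX or the plug-in. Deriving the state from two flags means the order of events does not matter, so a view opened before the sensor is plugged in still becomes ready once the device connects.

```python
from enum import Enum, auto
from typing import Optional


class PluginState(Enum):
    WAITING_FOR_DEVICE = auto()
    WAITING_FOR_VIEW = auto()
    READY = auto()


class PluginLifecycle:
    """Hypothetical readiness model; mouse input is never blocked by it."""
    GESTURE_VIEWS = {"VR", "VE"}

    def __init__(self) -> None:
        self.device_connected = False
        self.open_view: Optional[str] = None

    def on_device_connected(self) -> None:
        self.device_connected = True

    def on_device_disconnected(self) -> None:
        self.device_connected = False

    def on_view_opened(self, kind: str) -> None:
        self.open_view = kind

    def on_view_closed(self) -> None:
        self.open_view = None

    @property
    def state(self) -> PluginState:
        if not self.device_connected:
            return PluginState.WAITING_FOR_DEVICE
        if self.open_view not in self.GESTURE_VIEWS:
            return PluginState.WAITING_FOR_VIEW
        return PluginState.READY
```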

Requirements

Hardware requirements:

- Apple computer

- Leap Motion controller (www.leapmotion.com)

Software requirements:

- macOS (Mac OS X)

- OsiriX DICOM viewer

- Bitmedix software

OUR ACTIVITIES

INVITED LECTURES, PRESENTATIONS 2019 (Bitmedix Team members)

1. "EURO-SURGERY-2019", March 2019, Budapest, Hungary, Invited Speaker

2. "2nd Edition of European Conference on Otolaryngology, ENT Conference", May 2019, Singapore, Honorable Speaker

3. "European Conference on Ears, Nose and Throat Disorder 2019", May 2019, Stockholm, Sweden, Honorable Speaker, Workshop Chairman

4. "3rd World Congress on Surgery & Anesthesia", June 2019, Berlin, Germany, Invited Speaker

5. "Otolaryngology-2019, 3rd Global Summit on Otolaryngology-2019", November 2019, Amsterdam, Netherlands, Organizing Committee Member, Honorable Speaker

INVITED LECTURES, PRESENTATIONS 2018 (Bitmedix Team members)

1. "ENT Conference 2018; Breakthrough to Excellence in ENT Treatment", April 2018, Dubai, UAE, Organizing Committee Member / Invited Speaker and Editorial Board Member / Conference Related Journals

2. "ENT Conference 2018; Breakthrough to Excellence in ENT Treatment", May 2018, Osaka, Japan, Organizing Committee Member / Invited Speaker and Editorial Board Member / Conference Related Journals

3. "EURO-SURGERY-2018", August 2018, Prague, Czech Republic, Honorable Speaker

4. "8th International Conference on Otorhinolaryngology; Otorhinolaryngology: Modern Innovations and Clinical Aspects", October 2018, Rome, Italy, Organizing Committee Member / Invited Speaker

5. "3rd European Otolaryngology-ENT Surgery Conference", October 2018, London, UK, Honorable Speaker

6. International Conference on "Head and Neck: The Multidisciplinary Approach", December 2018, Dubai, UAE, Invited Honorable Speaker

OUR RESEARCH ARTICLES

What is the future of minimally invasive surgery in rhinology: marker-based virtual reality simulation with touch free surgeon's commands, 3D-surgical navigation with additional remote visualization in the operating room, or ...?

What is the Future of Minimally Invasive Sinus Surgery: Computer-Assisted Navigation, 3D-Surgical Planner, Augmented Reality in the Operating Room with 'in the Air' Surgeon's Commands

Do We Really Need a New Navigation-Noninvasive "on the Fly" Gesture-Controlled Incisionless Surgery?

Utilization Of 3d Medical Imaging and Touch-Free Navigation in Endoscopic Surgery: Does our Current Technologic Advancement Represent the Future Innovative Contactless Noninvasive Surgery in Rhinology? What is Next?

Media gallery

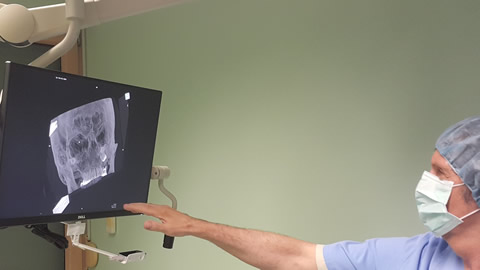

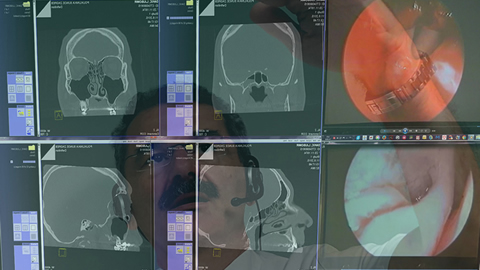

Preop planning 1

Preoperative planning: virtual endoscopy (VE) is a new diagnostic method that uses computer processing of 3D image datasets (such as multislice computed tomography (MSCT) and/or magnetic resonance imaging (MRI) scans) to provide simulated visualization of patient-specific organs similar or equivalent to that produced by standard endoscopic procedures (such as those performed at the Klapan Medical Group Polyclinic, a Bitmedix medical partner). Virtual visualization avoids the risks associated with real endoscopy and, when used before an actual endoscopic examination, can minimize procedural difficulties.

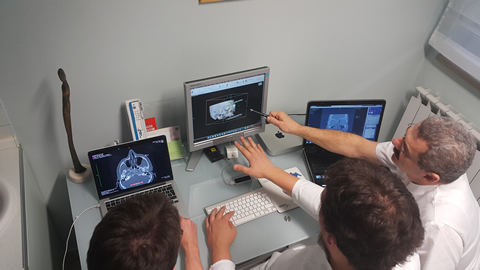

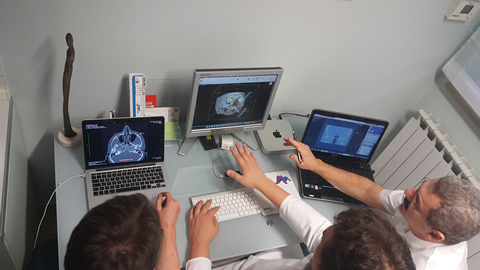

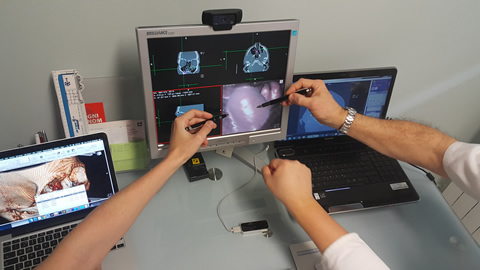

Preop planning 2

Preoperative planning: to understand the idea of virtual reality (VR), it is necessary to recognize that the perception of the surrounding world created in our brain is based on information coming from the human senses, together with knowledge stored in the brain. The real-time requirement means that the simulation must be able to follow the actions of a user who may be moving in the virtual environment. The computer system must also store a 3D model of the virtual environment in its memory. A real-time VR system then updates the 3D graphic visualization as the user moves (OsiriX and Leap Motion with the Bitmedix plug-in for the OsiriX platform), so that an up-to-date view is always shown on the computer screen, as seen (in the test phase, during preoperative planning) in the VR Lab of the Klapan Medical Group Polyclinic.

Preop planning 3

Preoperative analysis: navigation in virtual space is achieved by assigning camera functions to the three orthogonal axes of hand movement. The first evaluation of our system allowed us to observe users operating 3DVR and VE and adopting the principles of our proposed contactless interface. We propose using the angles of the palm's orientation as values that represent the speed of camera deflection, so that the tilt of the hand sets how fast the camera turns rather than where it points. This approach should provide more precision while navigating VE space and also reduce working complexity.
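The proposed use of palm angles as deflection speeds (rate control) can be sketched as follows. This is a minimal Python illustration under stated assumptions: the dead zone, the tilt that yields maximum speed, the maximum rate itself, and the choice of palm roll for yaw and palm pitch for camera pitch are all hypothetical values and pairings, not measured parameters of the system.

```python
import math
from typing import Tuple

DEAD_ZONE_DEG = 5.0    # hypothetical: ignore tremor and resting tilt
MAX_TILT_DEG = 45.0    # hypothetical: tilt that gives the maximum rate
MAX_RATE_DEG_S = 30.0  # hypothetical: fastest camera deflection


def tilt_to_rate(angle_deg: float) -> float:
    """Map a palm angle to a signed angular speed (deg/s) for the camera."""
    magnitude = abs(angle_deg)
    if magnitude <= DEAD_ZONE_DEG:
        return 0.0
    span = MAX_TILT_DEG - DEAD_ZONE_DEG
    fraction = min(1.0, (magnitude - DEAD_ZONE_DEG) / span)
    return math.copysign(fraction * MAX_RATE_DEG_S, angle_deg)


def step_camera(yaw: float, pitch: float, palm_roll: float,
                palm_pitch: float, dt: float) -> Tuple[float, float]:
    """Integrate rates over one frame: palm roll -> yaw, palm pitch -> pitch."""
    return (yaw + tilt_to_rate(palm_roll) * dt,
            pitch + tilt_to_rate(palm_pitch) * dt)
```

The dead zone keeps the camera still while the hand is roughly level, and the clamp keeps an extreme tilt from producing an uncontrollably fast turn in narrow VE passages.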

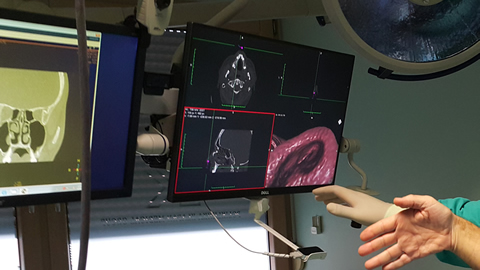

Preop planning 4

Do physicians really believe that improving the accuracy of 3D models generated from 2D medical images is of the greatest importance for the sustainable development of AR and VR in the OR? Additionally, one can conclude from "the standard surgical experience" that, in terms of future training and surgical practice, information technology developments in the OR might include VS within the same graphical user interface, using the personal computer mouse as the scalpel or surgical knife, as commented in some papers (but without any discussion of sterility in the OR?). Technical details: three-way communication between the endo-camera, the VE-LP monitors and the surgeon's senses will provide active virtual tracking (with detection of abnormal patterns in rhinologic endoscopy). This enables the surgeon to control the whole OR from one place, with NO touch screens or additional personnel activities, to run the system and to complete the operation itself successfully. In our experience, we need to find the 'best clinical practice' per viam the best VR application in the rhinology OR.

Preop planning 5

Since the very beginning of this most prestigious field of medicine, surgeons all over the world have always sought to conceive and then implement perfect, ideal conditions in the OR. Since the time of Sir Joseph Lister, Bt., the British surgeon and pioneer of antiseptic surgery, improved conditions in surgical practice and considerably longer patient survival have been achieved through numerous innovations in preoperative planning, intraoperative procedures and postoperative analysis, in particular the use of minimally invasive surgery (MIS). Toward the end of the 20th century, a new developmental impetus in surgery emerged with the advent of 3D visualization of anatomy and pathology and differential-color imaging of various tissues, according to differences in pixel values (transparency) on digital 2D medical diagnostic images with 256 gray levels. This was followed, at the beginning of the 1990s, by the development of 3D computer-assisted surgery (CAS) and navigation CAS (N-CAS) using 3D digitalizers ('robotic arm'), tele-3D-CAS, VR techniques and virtual simulators in surgery, which facilitated orientation in space (with 'six degrees of freedom') for the pioneers of this type of surgery. Today, at the beginning of the 21st century, the use of CAS and robots in surgery is well established and has substantially expanded the limits of operability in many borderline operable or inoperable patients.

Preop planning 6

Possible criticisms of the strategic planning process of our newest contactless ENT surgery have been discussed previously. As we have demonstrated, the OsiriX-LM navigation system shows that real and virtual objects need to be integrated through real 'in the air' control of simulated virtual activities, which requires real-time visualization of 3D-VE motions that follows the actions of the surgeon moving in the VR area. The rules of behavior in this imaginary world are defined precisely and simply per viam regions of interest (ROIs) on 3D volume-rendered MRI/MSCT slices (static and interactive dynamic 3D models). We used OsiriX MD (certified for medical use, FDA cleared and CE II labeled; the most widely used DICOM viewer, with advanced post-processing techniques in 2D-3D, as well as for 3D-4D navigation), Leap Motion (a hardware sensor device that accepts hand and finger motions as input and requires no hand contact or touching), and our specially designed SW that integrates the Leap Motion controller with medical imaging systems.

Preop planning 7

Among the many tools that OsiriX provides to the end user, a subset significantly simplifies working with ROI segments. Freehand tools such as pencil, brush, polygon and similar shapes enable precise selection of segments, whereas the more sophisticated 2D/3D grow region tool can automatically find the edges of the selected tissue by analyzing the density of surrounding pixels and recognizing similarities. Using a graphics tablet as a more natural user interface within the OsiriX image editor can simplify the segmentation routine and improve selection precision.

Preop planning 8

Based on our long-standing use of this and similar approaches in clinical practice (diagnostics and surgery/telesurgery (TS)) and on experience reported by other authors, we have found it quite simple to create an animated image of the course of surgery or telesurgery in the form of navigation, i.e. a fly-through of the real patient's operative field, as we have done from the very beginning (since 1998) in our computer-assisted TS. In this application, it is very easy to integrate real and 3D virtual objects (with high rendering speed and without reducing visual quality); presenting and manipulating them simultaneously in a single scene leads to hybrid systems known as augmented reality systems. When choosing an ROI or checkpoint to be activated, the user should be presented with a clear 3DVR or VE view with an unobtrusive list of ROIs or checkpoints and their associated VCs.

Preop planning 9

The ultimate goal of this innovative surgical procedure is to present virtual objects to all of the surgeon's senses in a way identical to their natural counterparts. Precise differentiation between pathology and normal soft tissue, as well as of fine bony details, under ideal conditions, is the basic prerequisite for employing our SW, which integrates the Leap Motion controller with medical imaging systems and is controlled only 'in the air' by the surgeon's hands. Our experience shows that all this can be done easily during the operative procedure, with some preoperative analysis if necessary, by use of navigation-guided, noninvasive, 'on the fly' gesture-controlled incisionless surgical interventions (e.g., based on our original Apple-based OsiriX-LM system). We can therefore state with confidence that this system is highly desirable for enabling contactless 'in the air' surgeon's commands, precise orientation in space, and the possibility of directing the real operation on the patient by 'copying' the VS performed previously (with or without navigation with a 3D digitalizer/'robotic hand') in the non-existing surgical world.

Preop planning 10

"Contactless surgery" makes a highly potent tool available to the physician-surgeon; if used rationally and properly, it can substantially change the outcomes of hitherto complicated and serious operative procedures by making it possible to assess their real feasibility. The surgeon can choose among a number of different approaches for future operations and finally select the safest, relatively fast and successful approach to the operative field. Just imagine that during the operation, the rhinosurgeon can simply 'navigate' through the oroantral fistula canal (which has been inconceivable to date), perform a 3D assessment of its relation to the roots of other teeth within the maxillary bone, visualize the fistula ostium mucosa in the maxillary sinus and its relation to the rest of the sinus mucosa, 'enter' the cyst and observe its extent and the composition of its content, and assess the medically justified degree and extent of pathologic cystic tissue removal while preserving the healthy and other vital anatomic elements of the oroantral region of the patient's head. By performing various forms of virtual diagnostics very quickly, followed by VE and finally VS, and repeating them as often as needed, we can rehearse the entire operative procedure in the virtual world (VW) of the patient's head anatomy and then 'copy' it in the same way in the future real operation on the real patient.

Preoperative diagnostic procedure

"There is no reality. Only our personal view on the reality", even in the operating room. If this view of the surrounding world is correct, we as physicians are justified in asking whether our consciousness/awareness is thus creating a new reality, or new forms of reality, in diagnostics and surgery. As the formed consciousness "defines our overall comprehension and, briefly, everything that exists, we cannot get behind it" (Max Planck). In modern medicine, this should be achieved through the integral perception of all newly formed parameters in the novel, previously unknown VW of diagnostics and surgery. How can we make the real-time visualization of 3D-VE motions and animated images of real surgery in our ORs more realistic? Do our colleagues believe that it can be done through pre- or intraoperative use of a Digital Imaging and Communications in Medicine (DICOM) viewer combined with a hardware (HW) sensor device that accepts hand and finger motions as input and requires no hand contact or touching ('touch-free commands'), as demonstrated during one of our routine preoperative diagnostic procedures? Beginners in ENT surgery, or colleagues unfamiliar with the application of modern technology in the OR, might read this article as a review reflecting only the authors' personal view, stating arguments in favor of the topic while adding no new information to the literature and no novel clinical insights that would alter patient treatment. On the contrary, we submit an argumentative essay, not a "case report paper" or a "description of standard therapy and knowledge", and we focus on the development of our original SW plug-in for a DICOM viewer platform (not available on the market), which lets users employ the LM sensor as an interface for camera positioning in 3D-VRen and VE views and integrates speech recognition as a VC solution in an original way.
This approach has not yet been used in rhinosinusology or ENT and, to our knowledge, not even in general surgery.

Preoperative analysis

The ultimate goal of this innovative surgical procedure is to present virtual objects to all of the surgeon's senses in a way identical to their natural counterparts. Precise differentiation between pathology and normal soft tissue, as well as of fine bony details, under ideal conditions, is the basic prerequisite for employing our SW, which integrates the Leap Motion controller with medical imaging systems and is controlled only 'in the air' by the surgeon's hands. Our experience shows that all this can be done easily during the operative procedure, with some preoperative analysis if necessary, by use of navigation-guided, noninvasive, 'on the fly' gesture-controlled incisionless surgical interventions (e.g., based on our original Apple-based OsiriX-LM system). We can therefore state with confidence that this system is highly desirable for enabling contactless 'in the air' surgeon's commands, precise orientation in space, and the possibility of directing the real operation on the patient by 'copying' the VS performed previously (with or without navigation with a 3D digitalizer/'robotic hand') in the non-existing surgical world.

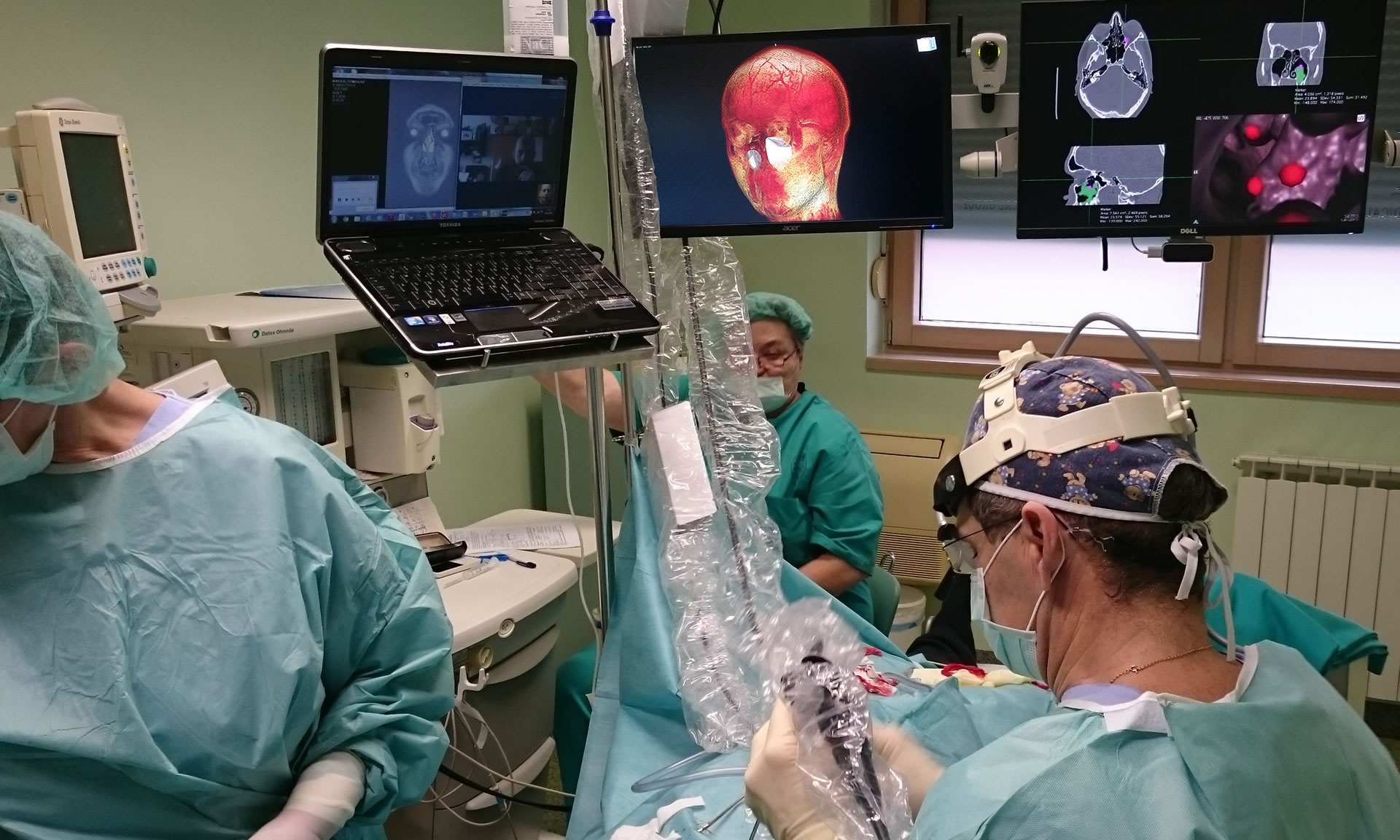

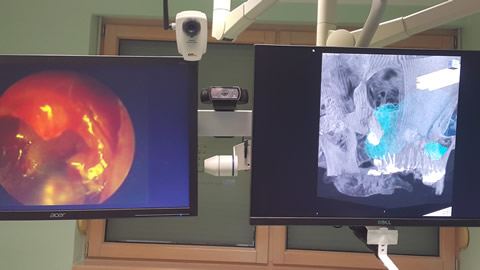

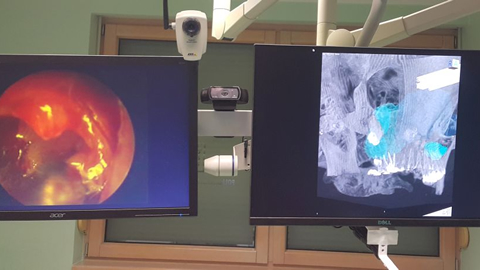

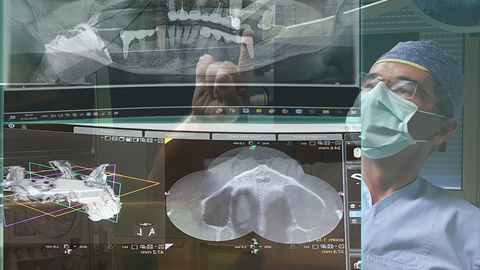

LeapMotion and OsiriX 1

Simulated 3D reconstruction of organs from MSCT cross sections is an important diagnostic tool because it provides clinicians with a more natural view of a patient's anatomy. Its interactivity lies in the possibility of simulating endoscope tip movements through an organ cavity or empty spaces (virtual endoscopy or virtual surgery), and the real-time requirement means that the simulation must be able to follow the actions of a user moving in the virtual environment, as seen in the operating room of the surgical department of the Klapan Medical Group Polyclinic.

LeapMotion and OsiriX 2

Even the best preoperative planning is of limited use if its implementation in the OR is not guaranteed; whereas these plans are traditionally transformed mentally by the surgeon during the intervention, computer assistance and virtual reality technology can substantially contribute to the precise execution of preoperative plans. Do we need a new sinus surgery technique in daily routine practice? Imagine that the human perception system could be deceived, creating the impression of another "external" world in which the "true reality" is replaced by a "simulated reality" that enables more precise, safer and faster diagnosis and surgery. We therefore tried to understand the new, visualized virtual world (VW) by creating an impression of virtual perception of the given position of all elements in the patient's head, which does not exist in the real world.

OsiriX ROI segments

Among the many tools that OsiriX provides to the end user, a subset significantly simplifies working with ROI segments. Freehand tools such as pencil, brush, polygon and similar shapes enable precise selection of segments, whereas the more sophisticated 2D/3D grow region tool can automatically find the edges of the selected tissue by analyzing the density of surrounding pixels and recognizing similarities. Using a graphics tablet as a more natural user interface within the OsiriX image editor can simplify the segmentation routine and improve selection precision.
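The idea behind a grow region tool can be illustrated with generic seeded region growing. The Python sketch below is not OsiriX's implementation (its exact algorithm is not described here); it is a textbook 2D flood fill that accepts 4-connected neighbours whose intensity stays within a hypothetical `tolerance` of the seed value. A 3D variant would add the two neighbours in adjacent slices.

```python
from collections import deque
from typing import List, Set, Tuple

Pixel = Tuple[int, int]


def grow_region(image: List[List[float]], seed: Pixel,
                tolerance: float) -> Set[Pixel]:
    """Collect pixels connected to `seed` whose value is within `tolerance`."""
    rows, cols = len(image), len(image[0])
    seed_value = image[seed[0]][seed[1]]
    region = {seed}
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):  # 4-connectivity
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols and (nr, nc) not in region:
                if abs(image[nr][nc] - seed_value) <= tolerance:
                    region.add((nr, nc))
                    queue.append((nr, nc))
    return region
```

Comparing against the seed value rather than the current neighbour keeps the region from drifting gradually into adjacent tissue with slowly changing density.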

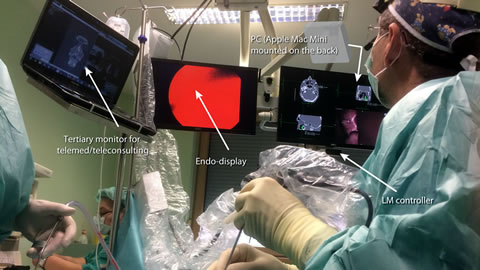

Positioning of the auxiliary staff members

Positioning of the auxiliary staff members is determined by the respective plan, as in any standard OR. An essential requirement in developing this new system was that the auxiliary staff could approach the LM controller without distracting the surgeon or entering the surgeon's working field. The native resolution of our monitors is 1920x1080, so they can display original full HD images at 1920x1080 without blurring or distortion [21]. To present DICOM images and 3D-rendered models we used the Dell UltraSharp U2413 monitor because of its high-quality color reproduction, wide viewing angles, the ability to view images simultaneously, very high pixel density and compliance with key medical standards (ICC profiles can be used for reviewing color medical images). For calibration we used QUBYX PerfectLum SW. Media 1: We focused on developing a personal 3D navigation system and applying augmented reality in the operating room per viam personalized contactless hand-gesture, non-invasive surgeon-computer interaction, with higher intraoperative safety and reduced operating time and patient postoperative recovery. However, on critical scientific consideration of our assessments, we must also raise the following points: a) we intend to offer an alternative to closed SW systems for visual tracking, and we want to start an initiative to develop an SW framework that interfaces with depth cameras and provides a set of standardized methods for medical applications such as hand gestures and tracking, face recognition, navigation, etc.
This SW should be (i) open source, operating-system agnostic, approved for medical use and hardware independent, and (ii) in the future, part of decentralized, self-organized medical swarm intelligence (SI) systems in a variety of VR fields in clinical medicine and fundamental research (motion gestures in the OR and preoperative diagnosis demo); b) in our future plans, we will discuss additional aspects that are currently of great interest regarding the future concept of MIS in rhinology and contactless surgery, as suggested by some of our colleagues, such as (i) computational fluid dynamics ("the set of digital imaging and communications in medicine images can be analyzed (e.g., pressure and flow rates in each nostril), so the final result of the surgeon is evaluated not only based on the patient's feeling but also on airflow characteristics"), and (ii) worldwide accepted training of future surgeons per viam revolutionary techniques and advances in VR.

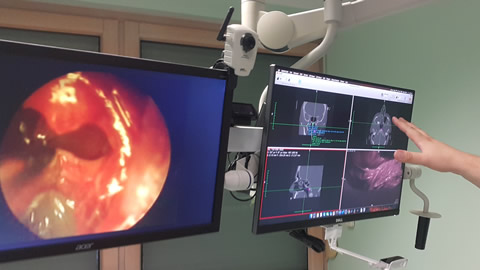

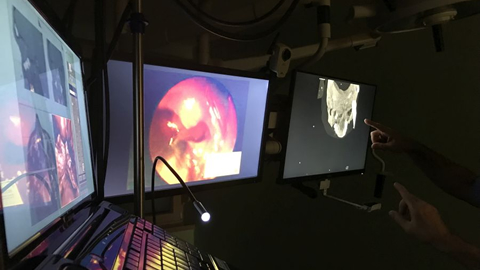

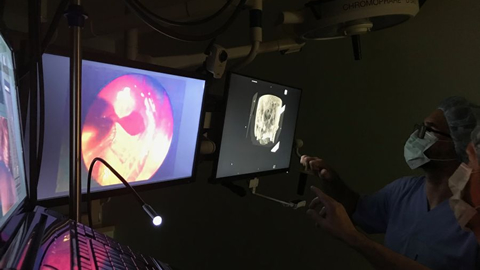

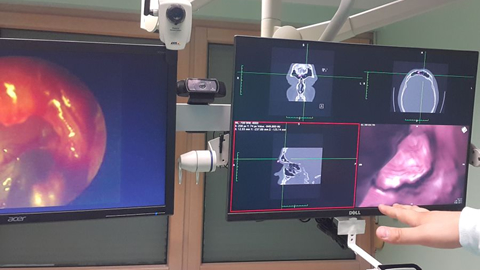

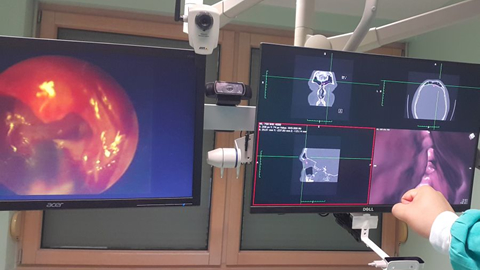

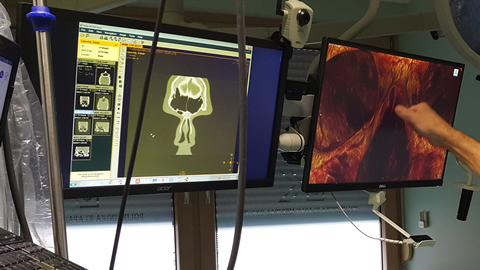

Virtual endoscopy 1

Virtual endoscopy: the surgeon concentrates on the surgery being performed and, after deciding to use VE, does not think about which hand position to use when entering the sensor's active area (a closed fist indicates the inactive state). OsiriX/Leap Motion/Bitmedix plug-in 3D navigation surgery in rhinology (with "commands in the air") is supported by virtual endoscopy and virtual surgery. While navigating through narrow pathways in VE, we noticed that the camera can stray into the tissue. Getting back on track then seemed hard, sometimes even impossible without returning to the starting position, as seen (in the test phase, during intraoperative analysis) in the operating room of the surgical department of the Klapan Medical Group Polyclinic.

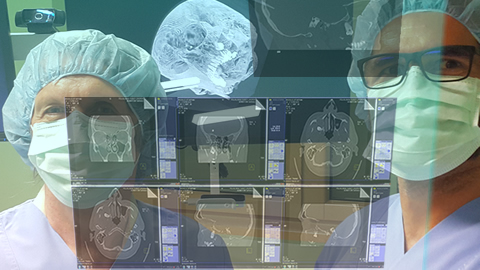

Virtual endoscopy 2

Virtual endoscopy: navigation endoscopic sinus surgery with virtual endoscopy (VE) and virtual surgery (VS), as seen (in the test phase, in the operating room) in the surgical department of the Klapan Medical Group Polyclinic. With this approach, the surgeon can use swipe-like gestures for positioning, which are more natural to humans than sign gestures. In many cases, when entering the sensor's active area, the surgeon kept an open hand (active state), which mostly resulted in a sudden change in position and loss of orientation in space.
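One way to avoid the sudden jump described above is to gate motion on the hand state: a closed fist is inactive, an open hand is active, and motion is applied only as frame-to-frame palm displacement after an explicit fist-to-open transition. The Python sketch below is a hypothetical mitigation, not the plug-in's documented behaviour; the "arm on fist" rule, the `HandFrame` fields and the `GestureGate` class are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]
ZERO: Vec3 = (0.0, 0.0, 0.0)


@dataclass
class HandFrame:
    present: bool                    # hand inside the sensor's active area
    open_hand: bool                  # True = open palm (active), False = fist
    palm: Vec3 = (0.0, 0.0, 0.0)


class GestureGate:
    """Hypothetical gate: returns the camera displacement for one frame."""

    def __init__(self) -> None:
        self.armed = False                 # set once a closed fist is seen
        self.last_palm: Optional[Vec3] = None

    def update(self, frame: HandFrame) -> Vec3:
        if not frame.present:              # hand left: reset everything
            self.armed = False
            self.last_palm = None
            return ZERO
        if not frame.open_hand:            # fist: inactive, but arm the gate
            self.armed = True
            self.last_palm = None
            return ZERO
        if not self.armed:                 # entered with an open hand: ignore
            return ZERO
        if self.last_palm is None:         # first active frame sets reference
            self.last_palm = frame.palm
            return ZERO
        delta = tuple(c - p for c, p in zip(frame.palm, self.last_palm))
        self.last_palm = frame.palm
        return delta
```

Because the first active frame only records a reference, opening the hand never moves the camera by itself; only subsequent palm motion does.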

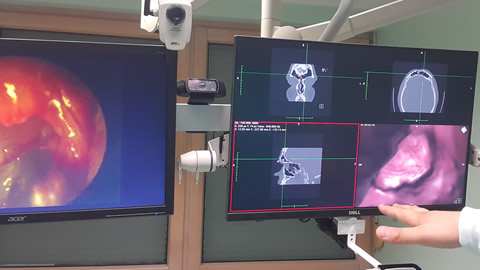

Virtual endoscopy 3

Preoperative virtual endoscopy: we integrate speech recognition as a VC solution in our own original way, which differs from projects that already exist on the market. Gestures are interpreted differently in 3DVR and VE because movement through the virtual space differs between the two views (orbiting the object versus flying through it).

Telemedicine remote education in the field of contactless virtual endoscopy/surgery

The advantages of VE (virtual endoscopy), tele-VE and/or tele-contactless VE are that there are no restrictions on the movement of the virtual endoscope, no instrument has to be inserted into a natural body opening or a minimally invasive opening, and no hospitalization is required. For our virtual endoscopy, navigation endoscopic sinus surgery with virtual endoscopy, and contactless surgery, we chose gestures that people can easily correlate with natural movements used when interacting with real-world objects, making navigation as fast as possible while maintaining precision and shortening learning time.

Some recent studies show that physicians are more likely to make errors during their first several to few dozen surgical procedures. The advantages of computer simulation are that procedures can be repeated many times without damaging the virtual body; the virtual body need not be dead, since many functions of a living body can be simulated for realistic visualization; and organs can be made transparent and modeled. The trainee can be warned of any mistakes in the surgical procedure by multimedia-based, context-sensitive help. Using the computer-recorded coordinate shifts of the 3D digitalizer during the telesurgery procedure, an animated image of the course of surgery can be created in the form of navigation, i.e. a fly-through of the real patient's operative field, as we have done from the very beginning (1998) in our tele-CAS contactless surgeries.
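Turning recorded digitalizer coordinate shifts into a fly-through can be illustrated with a minimal Python sketch. It assumes the recording is a time-sorted list of `(time_s, (x, y, z))` samples and resamples the path at a fixed frame rate using plain linear interpolation; the function names, the sample format and the choice of linear interpolation are hypothetical, and camera orientation is omitted for brevity.

```python
from bisect import bisect_right
from typing import List, Tuple

Point = Tuple[float, float, float]
Sample = Tuple[float, Point]  # (time in seconds, digitalizer position)


def interpolate_path(samples: List[Sample], t: float) -> Point:
    """Linearly interpolate the recorded position at time t."""
    times = [s[0] for s in samples]
    if t <= times[0]:
        return samples[0][1]
    if t >= times[-1]:
        return samples[-1][1]
    i = bisect_right(times, t)  # times[i - 1] <= t < times[i]
    t0, p0 = samples[i - 1]
    t1, p1 = samples[i]
    w = (t - t0) / (t1 - t0)
    return tuple(a + w * (b - a) for a, b in zip(p0, p1))


def fly_through_frames(samples: List[Sample], fps: float) -> List[Point]:
    """Resample the recording into evenly spaced animation frames."""
    start, end = samples[0][0], samples[-1][0]
    n = int(round((end - start) * fps)) + 1
    return [interpolate_path(samples, start + k / fps) for k in range(n)]
```

Resampling at a fixed rate decouples playback smoothness from the digitalizer's irregular sampling during the operation.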

Virtual endoscopy

Virtual endoscopy: fly-through techniques, which combine the features of endoscopic viewing and cross-sectional volumetric imaging, provide more effective and safer endoscopic procedures (marker-based VR simulation) and use the corresponding cross-sectional images or multiplanar reconstructions to evaluate anatomical structures during the operation (3D navigation and augmented reality in the OR). With the OsiriX/Leap Motion/Bitmedix plug-in, when choosing an ROI or a checkpoint to be activated, the user should be presented with a clear 3DVR or VE view with an unobtrusive list of ROIs or checkpoints and their associated VCs.

Virtual endoscopy LM setup

The virtual endoscopy LM system provides active tracking for maximum accuracy and two-way communication between the handpiece and the camera, which enables the surgeon to control the software remotely from the handpiece; no touch screens or additional personnel are required to run the system. Our Bitmedix team defined different types of one-handed gestures for 3DVR and for VE that enable navigation through virtual 3D space and adjustment of the viewing angle and camera position.
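As an illustration of a one-handed camera gesture, the sketch below maps hand displacement to an orbit around a focal point. It assumes, hypothetically, that palm displacement between frames is already available in millimetres (reading it from the sensor is outside the sketch); the `gain` value and the axis mapping are illustrative choices, not the gestures defined by our team.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class OrbitCamera:
    azimuth: float = 0.0     # radians, rotation around the vertical (y) axis
    elevation: float = 0.0   # radians, clamped short of the poles
    radius: float = 100.0    # mm, distance from the focal point

    def apply_hand_delta(self, dx_mm: float, dy_mm: float, dz_mm: float,
                         gain: float = 0.01) -> None:
        """Sideways/vertical hand motion rotates; dz changes the distance."""
        self.azimuth = (self.azimuth + gain * dx_mm) % (2 * math.pi)
        limit = math.pi / 2 - 1e-3
        self.elevation = max(-limit, min(limit, self.elevation + gain * dy_mm))
        self.radius = max(1.0, self.radius + dz_mm)  # never pass through the focal point

    def position(self, focal: Tuple[float, float, float] = (0.0, 0.0, 0.0)):
        """Camera position on a sphere around the focal point."""
        fx, fy, fz = focal
        ce = math.cos(self.elevation)
        return (fx + self.radius * ce * math.sin(self.azimuth),
                fy + self.radius * math.sin(self.elevation),
                fz + self.radius * ce * math.cos(self.azimuth))
```

Clamping the elevation just short of the poles prevents the view from flipping, which is one of the disorienting effects a natural-gesture mapping should avoid.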

"Contactless surgery"

"Contactless surgery" makes a highly potent tool available to the physician-surgeon; if used rationally and properly, it can substantially change the outcomes of operative procedures that have until now been complicated and serious, because their real feasibility can be assessed in advance. The surgeon can choose among a number of different approaches for future operations and finally select the safest, relatively fast and successful approach to the operative field. Just imagine that during the operation the rhinosurgeon can simply 'navigate' through the oroantral fistula canal (which has been inconceivable to date), assess in 3D its relation to the roots of the other teeth within the maxillary bone, visualize the fistula ostium mucosa in the maxillary sinus and its relation to the rest of the sinus mucosa, 'enter' the cyst and observe its extent and the composition of its content, and assess the medically justified degree and extent of pathologic cystic tissue removal while preserving the healthy and other vital anatomic elements of the oroantral region of the patient's head. By performing different forms of virtual diagnostics very quickly, followed by VE and finally VS, and by repeating them as often as needed, we can 'copy' the entire operative procedure as conceived in the VW (virtual world) of the patient's head anatomy, and then reproduce it in the same way in the future real operation on the real patient.

Functions and parameters

Some of the functions and parameters of our contactless surgery could be:

• currently active context (3DVR or VE) and the possibility to switch between them,

• a list of ROIs defined before the operation,

• parameters for each ROI, such as region color, transparency and activation/deactivation in the current context,

• a list of VE travel paths, each containing its own set of checkpoints,

• crop function activation, and

• other contextual OsiriX application functions and parameters.
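The functions and parameters listed above can be held in a small data model. The following Python sketch uses hypothetical class and field names; it only shows one way to keep the active context, the ROIs with their color, transparency and per-context activation, the VE travel paths with their checkpoints, and the crop flag together.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Set, Tuple


class Context(Enum):
    VR3D = "3DVR"
    VE = "VE"


@dataclass
class ROI:
    name: str
    color: Tuple[int, int, int]          # RGB, 0-255
    transparency: float = 0.0            # 0 = opaque, 1 = fully transparent
    active_in: Set[Context] = field(default_factory=lambda: {Context.VR3D, Context.VE})


@dataclass
class Checkpoint:
    name: str
    position: Tuple[float, float, float]  # mm, volume coordinates


@dataclass
class TravelPath:
    name: str
    checkpoints: List[Checkpoint] = field(default_factory=list)


@dataclass
class SessionState:
    context: Context = Context.VR3D
    rois: List[ROI] = field(default_factory=list)
    paths: List[TravelPath] = field(default_factory=list)
    crop_enabled: bool = False

    def toggle_context(self) -> Context:
        """Switch between the 3DVR and VE contexts."""
        self.context = Context.VE if self.context is Context.VR3D else Context.VR3D
        return self.context

    def visible_rois(self) -> List[ROI]:
        """ROIs activated for the current context."""
        return [r for r in self.rois if self.context in r.active_in]
```

Storing activation per context (rather than one global flag) lets an ROI stay visible in 3DVR while being hidden during VE navigation.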

Interactive display of correlated 2D and 3D data in the four-window format may assist the endoscopist in performing various image-guided procedures. Unlike real endoscopy, VE is completely noninvasive. In the nasal or sinus cavity, VE can clearly display the anatomic structure of the paranasal sinuses, nasopharyngeal cavity and upper respiratory tract and reveal damage to the sinus wall caused by a bone tumor or fracture, while the corresponding cross-sectional images or multiplanar reconstructions can be used to evaluate structures outside the sinus cavity. The major disadvantages of VE are that it cannot by itself influence performance in the OR, that evaluating the mucosal surface takes considerable time, and that it cannot provide a realistic illustration of the various pathologic findings in cases of highly obstructive sinonasal disease.
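In a four-window layout, three of the windows typically show orthogonal slices through the current point of interest (for example, the VE camera position) next to the 3D view. The sketch below extracts those three slices from a volume stored as nested lists indexed `volume[z][y][x]`; the layout, the indexing order and the function name are assumptions for illustration.

```python
from typing import List

Volume = List[List[List[float]]]  # indexed as volume[z][y][x]


def orthogonal_slices(volume: Volume, x: int, y: int, z: int):
    """Return the axial, coronal and sagittal slices through voxel (x, y, z)."""
    axial = [row[:] for row in volume[z]]                        # rows: y, cols: x
    coronal = [volume[k][y][:] for k in range(len(volume))]      # rows: z, cols: x
    sagittal = [[volume[k][j][x] for j in range(len(volume[k]))]
                for k in range(len(volume))]                     # rows: z, cols: y
    return axial, coronal, sagittal
```

Updating these three slices whenever the VE camera moves is what keeps the 2D and 3D windows correlated.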

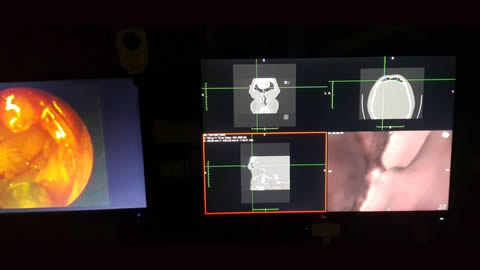

Teleconsultation 1

In otorhinolaryngology, research in the areas of 2D (two-dimensional) and 3D image analysis, visualization, tissue modelling and human-machine interfaces provides the scientific expertise necessary for developing successful 3D-CAS (computer assisted surgery), Tele-3D-CAS and VR applications. An impression of immersion can be realized in any medical institution using advanced computers and the computer networks required for interaction between a person and a remote environment, with the goal of realizing tele-presence. The basic requirement arising from these needs in otorhinolaryngology is a computer system to visualize the anatomic 3D structures and the entire operative field to be operated on. To understand the idea of 3D-CAS/VR, it is necessary to recognize that the perception of the surrounding world created in our brain is based on information coming from the human senses, interpreted with the help of knowledge stored in the brain. By the usual definition, VR is the impression of being present in a virtual environment that does not exist in reality, such as virtual endoscopy (VE) of the patient's head. The user/physician has the impression of presence in the virtual world and can navigate through it and manipulate virtual objects. A 3D-CAS/VR system may be designed so that the user/physician is completely immersed in the virtual environment.

Teleconsultation 2

Preoperative Preparation

The computer system has to store a 3D model of the virtual environment in its memory. A real-time VR system then updates the 3D graphical visualization as the user moves, so that an up-to-date view is always shown on the computer screen. Such preoperative preparation can be applied in a variety of software systems, and the data can be transmitted to distant collaborating radiologic and surgical sites for preoperative consultation as well as during the operative procedure in real time (telesurgery).

The real-time requirement means that the simulation must be able to follow the actions of a user who may be moving in the virtual environment. Realistic simulation requires the computer to generate at least 30 to 40 updated images per second, which places heavy demands on processing power. Virtual reality systems may be used for visualization of anatomic structures, virtual endoscopy and 3D image-guided surgery, as well as for visualization of the pathology and/or anatomy during therapy planning.
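The frame-rate requirement translates directly into a time budget per image: 1000 ms divided by the frame rate, i.e. about 33.3 ms at 30 images per second and 25 ms at 40. A tiny helper, with hypothetical names, makes the arithmetic explicit and checks a measured render time against it.

```python
def frame_budget_ms(fps: float) -> float:
    """Milliseconds available to render one image at the given frame rate."""
    return 1000.0 / fps


def fits_budget(render_ms: float, fps: float) -> bool:
    """True if a measured render time keeps up with the target frame rate."""
    return render_ms <= frame_budget_ms(fps)


for fps in (30, 40):
    print(f"{fps} images/s -> {frame_budget_ms(fps):.1f} ms per image")
```

This prints `33.3` ms for 30 images per second and `25.0` ms for 40; the whole pipeline (volume rendering plus any overlay and network work) has to fit inside that budget.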

Teleconsultation 3

Remotely using VE models supported by LM “commands in the air”, we simulated the penetration of the endoscope tip through parts of the nose and paranasal sinuses that cannot be explored with existing endoscopic methods alone, i.e. with only the “standard FESS endoscopic” approach, but can be explored in NESS/tele-NESS (navigation endoscopic sinus surgery) with virtual endoscopy (VE) and virtual surgery (VS). In this way we obtained the relative relationships of the borderline areas that are important for the final diagnosis of pathologic conditions in the region. While navigating through the narrow pathways in 3D/VE preoperatively, we noticed that the camera could stray into the tissue. Once that happened, getting back on track seemed hard, sometimes even impossible without returning to the starting position.
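One simple way to address the straying camera is to accept a move only if the new position is still in air, and to keep a history of accepted positions so the user can step back instead of restarting. The sketch assumes a CT volume in Hounsfield units indexed `volume[z][y][x]`, camera positions already in voxel coordinates given as `(x, y, z)`, and an illustrative air threshold of -400 HU; `GuardedCamera` is a hypothetical name, not our implementation.

```python
from typing import List, Tuple

AIR_THRESHOLD_HU = -400.0   # illustrative value, not a validated threshold
Voxel = Tuple[int, int, int]  # (x, y, z) voxel indices


class GuardedCamera:
    def __init__(self, volume: List[List[List[float]]], start: Voxel):
        self.volume = volume  # indexed volume[z][y][x], Hounsfield units
        if not self.is_air(start):
            raise ValueError("start position must lie in air")
        self.history: List[Voxel] = [start]  # accepted positions, newest last

    def is_air(self, p: Voxel) -> bool:
        """Inside the volume and darker than the air threshold."""
        x, y, z = p
        v = self.volume
        inside = 0 <= z < len(v) and 0 <= y < len(v[z]) and 0 <= x < len(v[z][y])
        return inside and v[z][y][x] < AIR_THRESHOLD_HU

    def move_to(self, p: Voxel) -> Voxel:
        """Accept the move only if it stays in air; otherwise stay put."""
        if self.is_air(p):
            self.history.append(p)
        return self.history[-1]

    def step_back(self, n: int = 1) -> Voxel:
        """Retrace accepted positions instead of restarting from the beginning."""
        del self.history[max(1, len(self.history) - n):]
        return self.history[-1]
```

This is plain move rejection, not a full collision model; it only guarantees that the camera is never left inside tissue and that the way back is always available.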

VR has many applications in CA surgery. Using the computer-recorded coordinate shifts of the 3D digitizer during the telesurgery procedure, an animated image of the course of surgery can be created in the form of navigation, i.e. a fly-through of the real patient's operative field, as we have done from the very beginning (since 1998) in our CA telesurgeries. After the CAS and/or tele-CAS operation, the surgeon compares the preoperative and postoperative images and models of the operative field and studies video records of the procedure itself. In otorhinolaryngology, especially in rhinology, research in the areas of 2D and 3D image analysis, visualization, tissue modeling and human-machine interfaces provides the scientific expertise necessary for developing successful VR applications. The basic requirement in rhinology arising from this is a computer system to visualize the anatomic 3D structures and the entire operative field to be operated on.

Teleconsultation 4

Computer Assisted Telesurgery

An example of 3D computer-assisted surgery of the nose and paranasal sinuses (3D-C-FESS) with simulation and planning of the course of operation (VE)

Tele-operation is a special case of tele-presence in which, in addition to the illusion of presence at a remote location, the operator can also perform certain actions or manipulations at the remote site. In this way, various actions can be performed at distant locations that cannot be reached because of danger, prohibitive cost or great distance. Realization of VR systems requires software (design of the VE) for running VR applications in real time. Computer technologies allow computer assisted surgery to be performed at a distance. The basic form of telesurgery can be realized using audio and video consultations during the procedure.

Virtual endoscopy (VE) is a new diagnostic method that uses computer processing of 3D image datasets (such as multislice computed tomography (MSCT) and/or magnetic resonance imaging (MRI) scans) to provide simulated visualization of patient-specific organs similar or equivalent to that produced by standard endoscopic procedures. Visualization avoids the risks associated with real endoscopy and, when used before an actual endoscopic examination, can minimize procedural difficulties, especially for endoscopists in training. This was demonstrated in the Croatian 3D computer assisted functional endoscopic sinus surgery (3D-CA-FESS) performed on June 3, 1994, in our first Croatian Tele-3D-CA-FESS in October 1998, and in our ongoing surgical activities.

Teleconsultation 5

Computer Assisted Diagnosis and Surgery

Virtual endoscopy and tele-virtual endoscopy overcome some difficulties of conventional endoscopy. In classical endoscopy, an endoscope is inserted into the patient to examine internal organs or spaces, and the physician uses an optical system to view the interior of the body.

3D-CAS systems can be used to aid the delivery of surgical procedures. The system fuses computer-generated images with the endoscopic image in real time. Surgical instruments carry 3D tracking sensors, and the instrument position is superimposed on the video image and on the CT image of the patient's head. The system also provides guidance according to the surgically planned trajectory. The advantages of the system include reduced procedure time, reduced training time, greater accuracy and reduced trauma for the patient. The use of a 3D spatial model of the operative field during surgery has also pointed to the need to position the tip of the instrument (endoscope, forceps, etc.) within the computer model.
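Superimposing the tracked instrument tip on the CT image requires mapping tracker coordinates into image coordinates. A minimal sketch, assuming a 4x4 rigid registration matrix has already been obtained (for example from fiducial-based patient registration, which is not shown) and an axis-aligned CT volume with known voxel spacing and origin; the function name is hypothetical.

```python
from typing import List, Sequence, Tuple

Matrix4 = List[List[float]]  # row-major 4x4 homogeneous transform


def tracker_to_voxel(p_tracker: Sequence[float], registration: Matrix4,
                     spacing_mm: Sequence[float],
                     origin_mm: Sequence[float]) -> Tuple[int, int, int]:
    """Map a tracked instrument-tip position (mm) to a CT voxel index (x, y, z)."""
    x, y, z = p_tracker
    hom = (x, y, z, 1.0)
    # rigid registration: CT patient coordinates = R * p + t (homogeneous form)
    ct = [sum(registration[r][c] * hom[c] for c in range(4)) for r in range(3)]
    # patient mm -> nearest voxel index, assuming an axis-aligned volume
    return tuple(round((ct[i] - origin_mm[i]) / spacing_mm[i]) for i in range(3))
```

For an oblique acquisition, the volume's direction cosines would also have to be applied before dividing by the spacing.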

Teleconsultation 6

Virtual endoscopy of the human head in different projections. Visualization of all paranasal sinuses and surrounding regions from different 3D aspects.

Modern Tele-3D computer assisted surgery (Tele-3D-CAS) should enable less experienced surgeons to perform critical surgeries with guidance and assistance from a remote, experienced surgeon. More than two locations can be involved in telesurgery, so a less experienced surgeon can be assisted by one, two or more experienced surgeons, depending on the complexity of the surgical procedure. In our activities, 3D-CAS and Tele-3D-CAS also provide real-time transfer of computer data (images, 3D models) during surgery and, in parallel, of the encoded live video signals. Through this network, the two encoded live video signals from the endocamera and the OR camera have to be transferred to the remote locations involved in the telesurgery/consultation procedure.

Comparative analysis of 3D anatomic models and intraoperative findings, in any kind of CAS and/or CA telesurgery, shows that 3D volume rendering is very good; it is in fact a visualization standard that depicts the anatomy much as it appears intraoperatively. These technologies are the basis for realistic simulations that are useful in many areas of human activity (including medicine), and they can create an impression of immersion of a physician in a non-existing, virtual environment. Such an impression of immersion can be realized in any medical institution using advanced computers and the computer networks required for interaction between a person and a remote environment, with the goal of realizing tele-presence.

Teleconsultation 7

Tele-conclusions

Different VR applications have become routine pre- and intraoperative procedures in human medicine, as we have shown in our surgical activities over the last two decades. They provide highly useful and informative visualization of the regions of interest and thus advance the definition of geometric information on the anatomic contours of 3D human head models through the conversion of so-called “image pixels” into “contour pixels”.
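The text does not define the conversion from “image pixels” to “contour pixels” precisely. One common reading, sketched below as an assumption, is: threshold the image into a binary mask, then keep the foreground pixels that touch at least one background pixel in their 4-neighbourhood. Function names and the threshold are illustrative.

```python
from typing import List, Set, Tuple

Image = List[List[float]]
Mask = List[List[int]]


def segment(image: Image, threshold: float) -> Mask:
    """Image pixels -> binary mask (1 = structure of interest)."""
    return [[1 if v >= threshold else 0 for v in row] for row in image]


def contour_pixels(mask: Mask) -> Set[Tuple[int, int]]:
    """(row, col) of foreground pixels with a background 4-neighbour.

    Foreground pixels on the image border also count as contour.
    """
    rows, cols = len(mask), len(mask[0])
    contour: Set[Tuple[int, int]] = set()
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c]:
                continue
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                rr, cc = r + dr, c + dc
                if not (0 <= rr < rows and 0 <= cc < cols) or not mask[rr][cc]:
                    contour.add((r, c))
                    break
    return contour
```

Applied slice by slice, the resulting contour pixels outline each anatomic structure and can then be stacked into the surface of a 3D head model.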

The physician has the impression of presence in the virtual world and can navigate through it and manipulate virtual objects. A 3D-CAS/VR and tele-3D-CAS system may be designed so that the physician is completely immersed in the virtual environment or tele-virtual environment. We can conclude that the basic purpose of such medical and telemedical systems is to create a sense of physical presence at a remote location. Tele-presence is achieved by generating sensory stimuli, so that the operator has the illusion of being present at a location distant from the location of physical presence. A tele-presence system extends the operator's sensory-motor facilities and problem-solving abilities to a remote environment. A tele-operation system enables operation at a distant remote site by providing the local operator with the sensory information necessary to simulate the operator's presence at the remote location. Realization of VR systems requires appropriate software for running VR applications in real time, and real-time simulation requires powerful computers that can perform the computations needed to generate visual displays.

VE or fly-through methods, which combine the features of endoscopic viewing and cross-sectional volumetric imaging, provided more effective and safer endoscopic procedures in the diagnosis and management of our patients, especially preoperatively, as already discussed.

Teleconsultation 8

We would like to emphasize that average, and occasionally even expert, surgeons may need additional intraoperative consultation (or VE/3D support), for example when anatomical landmarks are missing from the operative field because of trauma (war injuries), massive polypous lesions or loss of normal mucosa, bleeding, etc. Now imagine that we could substitute artificially generated sensations for the standard everyday information received by our senses. In that case, the human perception system could be deceived, creating the impression of another 'external' world around the person (e.g., 3D navigation surgery). In this way, we could replace true reality with a simulated reality that enables precise, safer and faster diagnosis as well as surgery. All simulated-reality systems share the ability to let the user move and act within apparent worlds instead of the real world.

With CA surgery and/or a tele-CA system, exact preoperative, noninvasive visualization of the spatial relationships of anatomic and pathologic structures (including extremely fragile ones) and of the size and extent of the pathologic process, together with precise prediction of the course of the surgical procedure, gives the surgeon in any 3D-CAS or tele-CAS procedure a considerable advantage in the preoperative examination of the patient and reduces the risk of intraoperative complications, all through the use of VR or VR-based diagnosis (the surgeon and/or telesurgeon operates in the “virtual world”). The use of a 3D model considerably facilitates the surgeon's orientation in the operative field, both at the “patient location” and at the “location of the tele-expert consultant”.

Telemed-education 1

Using intraoperative records, animated images of the tele-procedure as actually performed can be created. Tele-VE/VS offers the possibility of preoperative planning in rhinology. Intraoperative real-time use of the computer requires the development of appropriate hardware and software to connect the medical instruments to the computer and to operate the computer through these connected instruments and sophisticated multimedia interfaces.

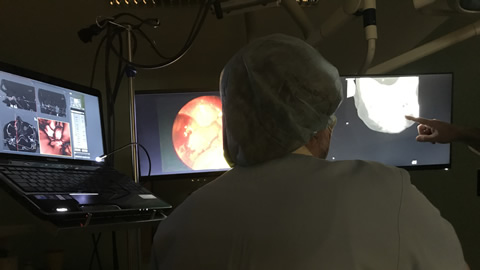

Education 1

EDU: navigation endoscopic sinus surgery with virtual endoscopy (VE), virtual surgery (VS) and LM. To reduce positioning time within VE, we also suggest using predefined points on paths (checkpoints) that can be used to relocate the camera; the surgeon should be able to look around and change the camera orientation, while the camera position cannot leave the predefined path. OsiriX/Leap Motion/Bitmedix plug-in for OsiriX: the application of a DICOM image viewer in the OR [17], without any physical contact with diagnostic imaging, medical or IT equipment, sets a new standard in the development of 21st-century surgery. It enables the surgeon to access and control all of the patient's medical data, as well as all digital diagnostic imaging, in real time, which cannot be said of the usual and until now most common “analysis” of medical imaging content. This was observed in the test phase (during postoperative analysis) in the operating room of the surgical department of the Klapan Medical Group Polyclinic.
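The checkpoint idea can be sketched as a camera whose position is a distance travelled along a predefined polyline path, so it cannot leave the path, while yaw and pitch remain free. Class and method names are hypothetical, and the path is assumed to be given as a list of 3D points in millimetres with checkpoints stored as indices into that list.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]


class PathCamera:
    """Camera whose position is confined to a polyline path; orientation is free."""

    def __init__(self, path: List[Point], checkpoints: List[int]):
        if len(path) < 2:
            raise ValueError("path needs at least two points")
        self.path = path
        self.checkpoints = checkpoints  # indices into path
        self.s = 0.0                    # arc length travelled along the path (mm)
        self.yaw = 0.0                  # free look direction (radians)
        self.pitch = 0.0
        self.seg_len = [math.dist(a, b) for a, b in zip(path, path[1:])]
        self.total = sum(self.seg_len)

    def advance(self, ds: float) -> Point:
        """Move forwards/backwards along the path, clamped to its ends."""
        self.s = min(max(self.s + ds, 0.0), self.total)
        return self.position()

    def jump_to_checkpoint(self, k: int) -> Point:
        """Relocate the camera to the k-th checkpoint."""
        self.s = sum(self.seg_len[: self.checkpoints[k]])
        return self.position()

    def look(self, dyaw: float, dpitch: float) -> None:
        """Change orientation only; the position stays on the path."""
        self.yaw += dyaw
        self.pitch = max(-math.pi / 2, min(math.pi / 2, self.pitch + dpitch))

    def position(self) -> Point:
        remaining = self.s
        for (a, b), length in zip(zip(self.path, self.path[1:]), self.seg_len):
            if remaining <= length and length > 0:
                w = remaining / length
                return tuple(ai + w * (bi - ai) for ai, bi in zip(a, b))
            remaining -= length
        return self.path[-1]
```

Because the position is a single scalar along the path, a gesture or voice command only has to change that scalar, which is why the camera can never stray into the tissue.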

Telemed-education 2

Telemed-education 3

3D image analysis, tissue modelling, 3D-CAS (3D computer assisted surgery), tele-3D-CAS and tele-VR (virtual reality) are the basis for realistic tele-simulations and can create an impression of immersion of a physician in a non-existing, virtual/tele-virtual environment. The real-time requirement means that the simulation must be able to follow the actions of a user who may be moving in the virtual environment (VE). The computer system must also store a 3D model of the virtual environment (such as 3D-CAS models) in its memory, and a real-time VR system then updates the 3D graphical visualization as the user moves, so that an up-to-date view is always shown on the computer screen. After the tele-education operation or a similar procedure, the surgeon/teleconsultant compares the preoperative and postoperative images and models of the operative field and studies video records of the procedure itself.